Justin Sapun, justin.sapun.th@dartmouth.edu

Arun Guruswamy, Arun.Guruswamy.th@dartmouth.edu

This project implements a real-time audio analysis and video synthesis system on the Zybo Z7-10 FPGA. Audio is streamed from either the onboard I2S codec or a Direct Digital Synthesis (DDS) module, then processed by a Fast Fourier Transform (FFT) pipeline to extract frequency-domain features. These features drive HDMI video effects, so the visual output responds dynamically to the audio input. The design is built entirely with AXI-Stream and AXI-Lite interfaces, using Xilinx IP and custom VHDL components.

Design Components

- Zybo Z7-10 FPGA development board
- I2S Audio Codec (ADAU1761)
- Direct Digital Synthesis (DDS) module
- FFT core for real-time spectral analysis
- AXI4-Stream and AXI4-Lite interfaces
- HDMI timing and video output modules
- Onboard switches and buttons for mode control
- Custom VHDL logic and AXI wrappers

Implementation

We structured the system around modular VHDL components, each with isolated responsibilities for audio buffering, FFT analysis, and video transformation. Careful coordination between the audio and video clock domains was key to maintaining synchronization. Debugging involved both simulation and hardware tools like the ILA, which helped uncover subtle issues like timing polarity mismatches and spectral leakage early in the integration process.

Video Generation

To generate visuals on the HDMI display, we built a pixel generator synchronized with a Video Timing Controller and pixel clock. This module dynamically created scenery including a moving square by tracking the current x and y pixel coordinates. Color was applied to pixels falling within the bounds of the square, while other areas remained black. A simple FSM updated the square's position each frame, and we layered in basic background elements like a tree and grass to test spatial rendering. This provided a foundation for later transformation based on audio input.
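The per-pixel decision and the per-frame position update described above can be modeled in a few lines of Python. This is a software sketch only, not the project's VHDL: the resolution, square size, step size, color, and the bounce-at-the-edge behavior are all assumptions chosen for illustration.

```python
# Software model of the pixel generator. All constants are illustrative assumptions.
H_ACTIVE, V_ACTIVE = 1280, 720   # assumed active video resolution
SQUARE_SIZE = 64                 # assumed square edge length in pixels
STEP = 4                         # assumed pixels moved per frame

BLACK = (0, 0, 0)
SQUARE_COLOR = (255, 0, 0)       # assumed default square color


def pixel_color(x, y, sq_x, sq_y):
    """Return the RGB value for pixel (x, y) given the square's top-left corner."""
    inside = sq_x <= x < sq_x + SQUARE_SIZE and sq_y <= y < sq_y + SQUARE_SIZE
    return SQUARE_COLOR if inside else BLACK


def next_position(sq_x, direction):
    """Advance the square once per frame, reversing at the screen edges (assumed)."""
    new_x = sq_x + direction * STEP
    if new_x < 0 or new_x + SQUARE_SIZE > H_ACTIVE:
        direction = -direction
        new_x = sq_x + direction * STEP
    return new_x, direction
```

In hardware, the equivalent of `pixel_color` would be evaluated once per pixel clock, and the equivalent of `next_position` once per frame (for example, on vertical sync).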

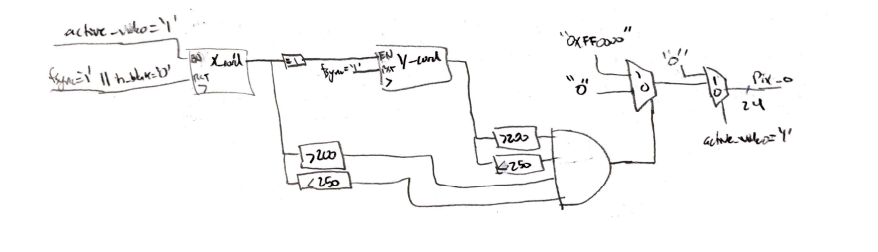

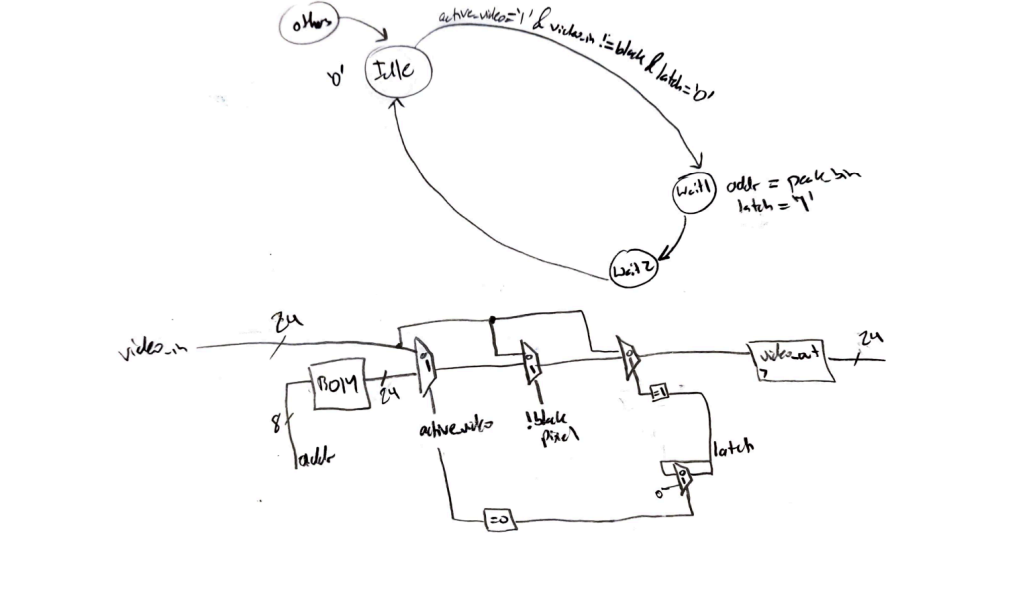

Video Transform

This part of the system handles real-time, audio-driven video effects. Audio samples from either I2S or DDS are streamed into a custom AXI FIFO buffer (`axis_fifo.vhd`), where 512 samples are collected at 48 kHz and windowed using a Hann function. These samples are sent in bursts to a pipelined FFT IP core operating at 100 MHz. The FFT output is parsed by `fft_axi_rx.vhd`, which calculates the magnitude squared of each bin and identifies the bin with the largest value (the peak frequency). This bin index is sent to the `rgb_transform.vhd` module, which scans each pixel and modifies the color of designated moving objects based on the dominant frequency. A preloaded colormap stored in BRAM links frequency bins to RGB values, enabling dynamic, audio-responsive visuals on the HDMI output.
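The signal chain in this paragraph (window a 512-sample frame, transform it, take the magnitude squared, pick the largest bin) can be sketched as a floating-point Python model. It is not the fixed-point hardware implementation: the recursive `fft` helper stands in for the FFT IP core, searching only the lower half of the spectrum is an assumption, and the 3 kHz test tone is hypothetical.

```python
import cmath
import math

N = 512        # FFT length, from the text
FS = 48_000    # audio sample rate in Hz, from the text


def hann(n_points):
    """Periodic Hann window coefficients."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / n_points) for n in range(n_points)]


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def peak_bin(samples):
    """Window one frame, transform it, and return the index of the strongest bin."""
    window = hann(len(samples))
    spectrum = fft([s * w for s, w in zip(samples, window)])
    half = len(samples) // 2   # real input: the upper half mirrors the lower half
    mag_sq = [c.real ** 2 + c.imag ** 2 for c in spectrum[:half]]
    return max(range(half), key=mag_sq.__getitem__)


tone_hz = 3000.0   # hypothetical test tone that falls exactly on a bin
frame = [math.sin(2 * math.pi * tone_hz * n / FS) for n in range(N)]
k = peak_bin(frame)
print(k, k * FS / N)   # prints: 32 3000.0
```

Comparing magnitude squared rather than magnitude gives the same peak while avoiding a square root, which is the usual reason to prefer it in hardware.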

The paper design below shows only the pixel-change logic in `video_transform.vhd`, which runs after FFT processing.

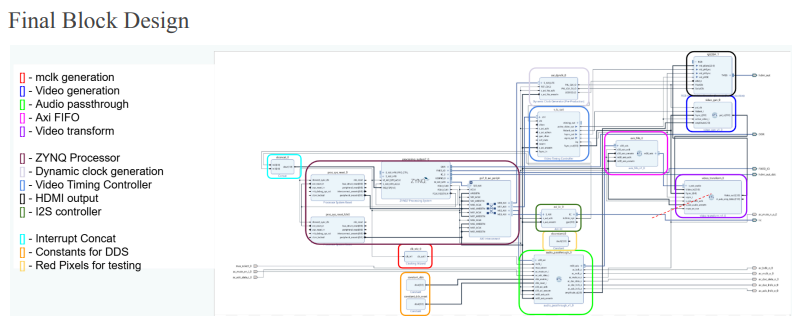
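As a rough software model of the recoloring step, the sketch below looks up an RGB value from a table indexed by the peak bin and applies it only to pixels flagged as part of the moving object. The hue-sweep colormap contents, the 256-entry table size, and the `is_moving_object` flag are assumptions for illustration; the actual BRAM contents and object-detection logic are not specified here.

```python
import colorsys

N_BINS = 256   # assumed table size: bins below Nyquist for a 512-point FFT

# Hypothetical colormap: sweep hue from red (low bins) toward violet (high bins).
COLORMAP = []
for k in range(N_BINS):
    r, g, b = colorsys.hsv_to_rgb(0.8 * k / (N_BINS - 1), 1.0, 1.0)
    COLORMAP.append((round(r * 255), round(g * 255), round(b * 255)))


def transform_pixel(rgb_in, is_moving_object, peak_bin):
    """Recolor pixels of the moving object; pass all other pixels through unchanged."""
    if is_moving_object:
        return COLORMAP[peak_bin]
    return rgb_in
```

A lookup table keeps the per-pixel path to a single memory read, so the mapping from frequency to color can be changed by reloading the table rather than rewriting logic.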

System Integration

All major components were combined into a single top-level design to complete the full audio-to-video processing pipeline. The final block diagram includes the I2S/DDS audio input, AXI FIFO for buffering, FFT IP core, frequency analysis logic, and video generation and transformation modules. Control inputs like mute and source select were wired through AXI-Lite, and synchronization between domains was managed using separate audio and video clocks. This fully integrated system produces real-time HDMI output that reacts dynamically to audio signals in hardware.

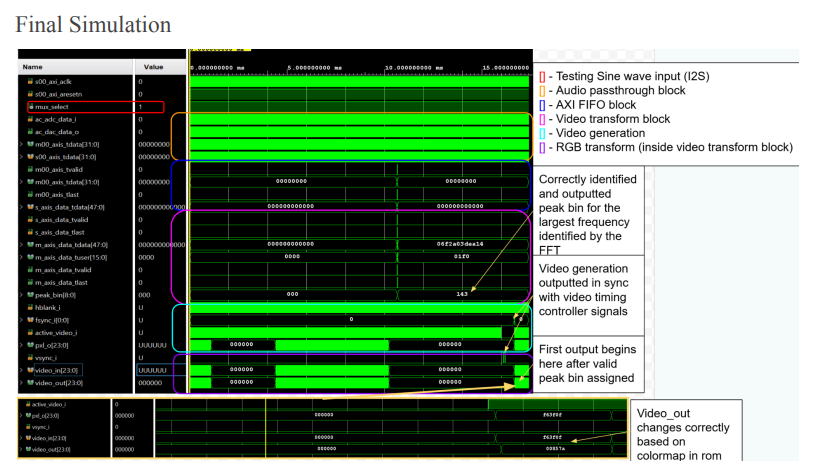

System Verification

Throughout development, we created individual testbenches for each module to verify functionality in isolation. A final testbench simulated the complete integrated system, including audio input, FFT processing, and video transformation. Because the audio sample clock runs at only 48 kHz, simulation runtime was long, but the testbench successfully validated end-to-end behavior and allowed us to proceed confidently with hardware testing.
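A quick back-of-the-envelope calculation shows why the full-system simulation is slow. Assuming the simulator has to advance the 100 MHz FFT clock the whole time a 512-sample frame is being collected at 48 kHz, each frame costs roughly a million fast-clock cycles:

```python
FS_AUDIO = 48_000          # audio sample rate (Hz), from the text
F_FFT_CLK = 100_000_000    # FFT clock (Hz), from the text
N = 512                    # samples per FFT frame, from the text

frame_time_s = N / FS_AUDIO
clk_cycles_per_frame = F_FFT_CLK * N // FS_AUDIO   # whole cycles only

print(f"{frame_time_s * 1e3:.2f} ms per frame")            # prints: 10.67 ms per frame
print(f"{clk_cycles_per_frame:,} clock cycles per frame")  # prints: 1,066,666 clock cycles per frame
```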

Demo

In the demo video, we play a sweep tone from YouTube that rises from 0 Hz to 20 kHz. The system tracks the dominant frequency in real time, changing the color of the moving square on screen as the detected peak bin shifts with the audio.
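For reference, the relationship between tone frequency and FFT bin follows directly from the 512-point transform and 48 kHz sample rate given earlier. The short sketch below (the `freq_to_bin` helper is a hypothetical name, and rounding to the nearest bin is an idealization) shows which bins a 0 Hz to 20 kHz sweep passes through:

```python
FS = 48_000   # sample rate (Hz), from the text
N = 512       # FFT length, from the text

bin_width_hz = FS / N   # 93.75 Hz per bin


def freq_to_bin(freq_hz):
    """Nearest FFT bin for a given frequency (idealized, ignores leakage)."""
    return round(freq_hz / bin_width_hz)


for f in (0, 1_000, 10_000, 20_000):
    print(f, freq_to_bin(f))
# prints:
# 0 0
# 1000 11
# 10000 107
# 20000 213
```

Under these assumptions, the sweep covers roughly bins 0 through 213 of the 256 bins below the 24 kHz Nyquist frequency.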

Considerations

While the system architecture worked as intended, the FFT output introduced unexpected challenges during implementation. Spectral leakage made peak frequency detection unstable, which showed up as rapid color shifts on screen. To address this, we applied a Hann window to the buffered audio samples before FFT processing. This reduced but did not fully eliminate the effect, highlighting the nuance of real-time signal analysis in hardware.
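The effect of windowing on leakage can be illustrated with a small floating-point experiment. The sketch below is not a measurement from the hardware: it uses a hypothetical tone placed halfway between two bins (a worst case for leakage), a direct DFT for clarity, and an arbitrary guard band of two bins around the peak. It compares how much spectral energy lands far from the peak with and without a Hann window.

```python
import cmath
import math

N, FS = 512, 48_000   # FFT length and sample rate, from the text


def power_spectrum(samples):
    """Magnitude squared of the lower-half DFT bins (direct O(N^2) DFT for clarity)."""
    n = len(samples)
    spec = []
    for k in range(n // 2):
        acc = sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples))
        spec.append(acc.real ** 2 + acc.imag ** 2)
    return spec


def leakage_fraction(samples, guard=2):
    """Fraction of spectral energy more than `guard` bins away from the peak."""
    p = power_spectrum(samples)
    peak = max(range(len(p)), key=p.__getitem__)
    near = sum(p[max(0, peak - guard): peak + guard + 1])
    return 1.0 - near / sum(p)


tone_hz = 32.5 * FS / N   # hypothetical tone halfway between bins 32 and 33
x = [math.sin(2 * math.pi * tone_hz * i / FS) for i in range(N)]
x_hann = [s * (0.5 - 0.5 * math.cos(2 * math.pi * i / N)) for i, s in enumerate(x)]

rect_leak = leakage_fraction(x)
hann_leak = leakage_fraction(x_hann)
print(f"rectangular: {rect_leak:.4f}  hann: {hann_leak:.4f}")
```

The Hann-windowed frame leaks far less energy into distant bins, at the cost of a wider main lobe. That wider lobe spreads a single tone across adjacent bins, which is one plausible reason the detected peak can still jump between neighbors after windowing.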

Skills

We gained experience in VHDL design, AXI-Stream and AXI-Lite protocols, FFT-based audio processing, and HDMI video synchronization. The project involved clock domain management, simulation with custom testbenches, and debugging using Vivado and the Integrated Logic Analyzer (ILA). We also applied windowing techniques to reduce spectral leakage in real-time signal analysis.